Writing Tips from the PDB: Conveying Uncertainty

I recently shared tips for writing effectively for busy people, which I used at the CIA when writing for the President’s Daily Brief (PDB) and now use with private sector executives. I illustrated them with Star Wars/Trek examples. This post goes deeper on how to convey uncertainty effectively, sticking with Star Wars/Trek examples.

In my previous article, I said it’s important to be very careful about terms like “probably”, “likely”, “may”, “could”, etc. These terms can be ambiguous and people interpret them in different ways. It’s also often hard to be more precise with the limited information you have. That said, sometimes you actually need to make a likelihood assessment.

OK, so let’s get into it. I’ll cover:

1. Use the simplest estimative language
2. No “weasel words” without conditionals
3. No percentages without real math
4. Predictions should be useful, not right
5. Avoid confidence statements if possible

1. Use the Simplest Estimative Language

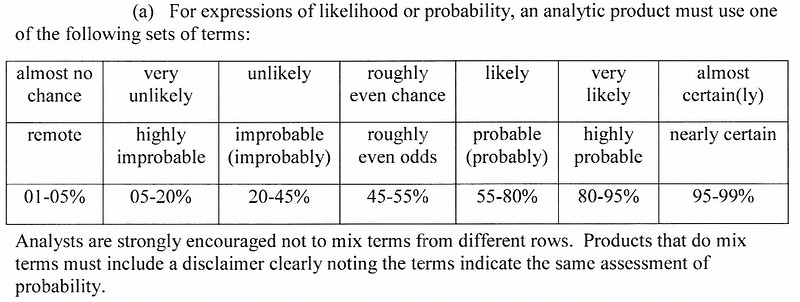

If you’ll bear with me for a bit of intelligence nerdom, Intelligence Community Directive 203 is a helpful place to start. It lists some estimative words (“likely”, “probably”, etc.) that people generally interpret in the same way. And just in case they don’t, it explains how one is supposed to interpret those words. From page 3:

In my view, this is a reasonable approach for high-consequence official assessments.

Like, say you’re assessing whether the Chancellor of the Galactic Republic is a Sith Lord.

There will certainly be an investigation by the Galactic Senate. I’d want to be as specific as possible and include a chart like the above.

However, I find that for most work, having many gradations of probability and requiring a legend to understand them has two problems:

It’s still complex. The people you’re writing for don’t need to parse the difference between “probable” and “highly probable,” or to refer back to a key like the above.

It implies certainty you don’t have. We’re doing social science here. Unless you’ve calculated the exact likelihood of something with data and math, you don’t actually know if something is 80–95% certain vs. >95% certain. Hence the more you specify gradations, the more you imply a certainty you don’t have.

To demonstrate, take a look at these three sentences. There’s a BIG difference between 1 and 3, but I’m not sure what the difference is between 1 and 2, or between 2 and 3:

1. It is likely that Chancellor Palpatine is a Sith Lord.
2. It is very likely that Chancellor Palpatine is a Sith Lord.
3. It is almost certain that Chancellor Palpatine is a Sith Lord.

So I offer instead this simplified guide. This uses the simplest language possible that still says exactly what you mean (see tip #3 in my original list):

This version is easier to understand and less likely to imply certainty you don’t have. You could of course replace the exact words with some alternatives; for example, there are a lot of ways to say “we don’t know” and you can replace “likely” with “probable”.

2. No “Weasel Words” Without Conditionals

On the flip side of the above, I want to flag a few no-nos.

First, no “may,” “might,” or “could” without a conditional. These are “weasel words,” in that they let you “weasel” out of having to actually say anything. In general, only use them when they are modified by an “if.” For example:

GOOD: The Federation might make peace with the Klingon Empire if the Empire experiences a disaster that makes it too costly to wage war. This lets me know something is possible only if a specific situation happens.

NOT GOOD: The Klingon moon Praxis could explode and create an environmental disaster that would force the Klingon Empire to sue for peace. This doesn’t tell me anything about whether this situation is likely.

3. No Percentages Without Real Math

Second, no specific numbers unless you’re actually using data and math and a statistics Ph.D. Without those, you don’t have nearly enough certainty to state an exact percentage chance (and even with them you might not).

GOOD: There’s an 80% chance that overmining will cause the Klingon moon Praxis to explode in the next 6 months, based on a statistical analysis of previous overmining and moon explosion incidents.

NOT GOOD: There’s an 80% chance Palpatine is a Sith Lord, based on a few anecdotal situations in which he seemed to have a particularly evil voice.

4. Predictions Should Be Useful, Not Right

As they say, predictions are hard, especially about the future.

Assessing what is occurring NOW is, in theory, just a matter of more information. There is a truth, and if you have enough information, you can know that truth.

Assessing the future is inherently more unknowable. It depends on events with unpredictable interactions, or even worse, on decisions by peskily illogical humans.

For these situations, it matters less that your prediction is correct than that it is useful. Let’s use this example:

The Borg cube is on its way to Earth and is likely to destroy the planet.

This certainly seemed true after the cube destroyed the Federation fleet at Wolf 359. But then the Enterprise crew found a way to destroy the cube remotely, after rescuing Captain Picard while he was still connected to the Borg “collective” hive mind.

More to the point though, that prediction was not useful. After the fleet was destroyed, it was pretty clear the Borg were in a good position to destroy Earth. What would’ve been MORE useful was an assessment of the weaknesses of the cube, like:

We are exploring ways to use Captain Picard’s continuing link to the Borg collective to remotely destroy the cube that threatens Earth.

There’s not even a prediction in there, but Federation leaders will probably want to know what those possibilities are. Just goes to show it’s more important to be useful than right.

5. Avoid Confidence Statements if Possible

I may differ with Intelligence Community orthodoxy here, as I’ve rarely, if ever, found a good use for confidence statements.

These are phrases like “we have low/medium/high confidence in our assessment”, separate from what the assessment actually is.

I generally find there’s a way to be more precise about the original assessment, rather than tacking on a confidence level that muddies the waters.

Let’s take some of our examples:

It is almost certain that Chancellor Palpatine is a Sith Lord. We have high confidence because we saw him kill Mace Windu with the Force. But, if you didn’t have high confidence, this wouldn’t be almost certain. So what’s the point of the confidence statement?

The Borg cube is on its way to Earth and is likely to destroy the planet. We have medium confidence because this is an unprecedented situation and the future is unpredictable. The unprecedented nature of the situation is what makes this likely instead of almost certain, so again, what’s the point of the confidence statement?

Ok folks, there you have it, a guide to simply and clearly expressing uncertainty, and some pitfalls to avoid. Hope you enjoyed it, and stay tuned for (hopefully) one more article about formatting and using visuals and slide decks to express information clearly.